The metrics of science back to front and inside out

Gabriel Vélez Cuartas

There is, without any doubt, a conflict between citation and indexing systems and the social sciences and humanities. The criticisms of traditional scientometric models that fail to consider the contributions of these fields are well known: coverage problems (Torres and Delgado, 2013; Harzing, 2013; Miguel, 2011), a lack of instruments to measure what actually exists (Romero, Acosta, Tejada, 2013; DORA, 2012), limited access to national information (Marques, 2015), bibliometric tools that are inappropriate for the humanities (Beigel, 2018; Gómez Morales, 2015; Žic Fuchs, 2014), the need to broaden the criteria for assessing science (Wouters & Hicks, 2015), and the difficulties of using synthetic (composite) indices for assessment (Sanz Casado et al, 2013).

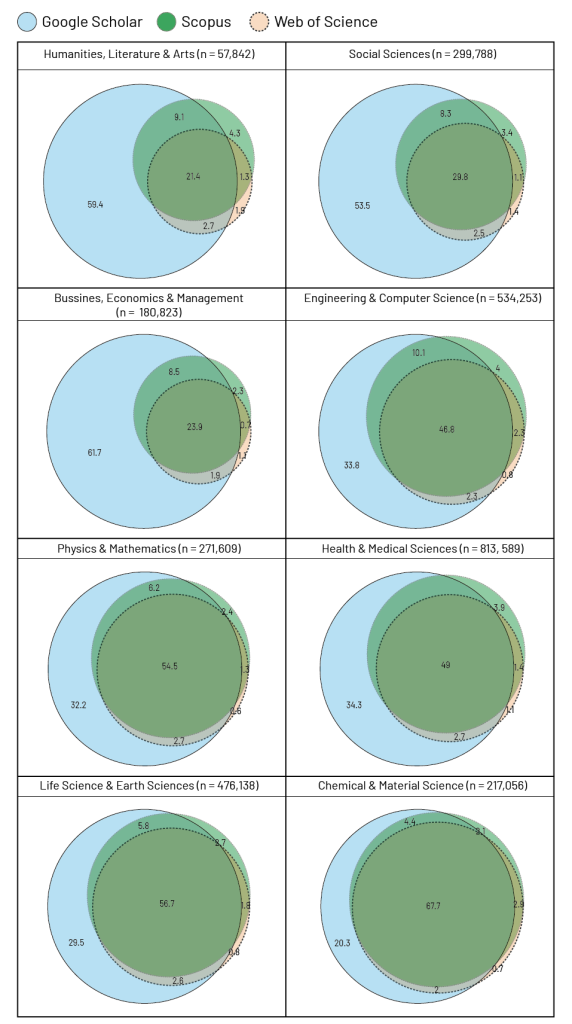

A telling example of the limits of the instruments most widely used to assess scientific production (Impact Factor and SJR) can be seen in a study that compares citations per article in WoS, Scopus and Google Scholar (Martín-Martín et al, 2018). The study shows that in the social sciences, in the humanities and arts, and in economics and business, more than 50% of citations are not captured by WoS and Scopus (see figure 1). Google Scholar seems to be a model better suited to the social sciences and humanities.

Figure 1.

Percentage of unique and overlapped citations in Google Scholar, Scopus and Web of Science per subject field of cited documents.

Source: Martín-Martín, A., Orduna-Malea, E., Thelwall, M., & Delgado-López-Cózar, E. 2018.
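To make the idea of unique and overlapping citations concrete, the following is a minimal sketch of how such shares could be computed from sets of citation identifiers. It is not the procedure used by Martín-Martín et al; the function name `overlap_shares` and the toy identifiers (`c1`, `c2`, ...) are invented for illustration only.

```python
def overlap_shares(citations_by_source):
    """Share of all distinct citations found in each exact combination of sources."""
    # Pool every citation seen in at least one source.
    all_citations = set().union(*citations_by_source.values())
    counts = {}
    for citation in all_citations:
        # The combination of sources that index this particular citation.
        found_in = tuple(sorted(
            name for name, ids in citations_by_source.items() if citation in ids
        ))
        counts[found_in] = counts.get(found_in, 0) + 1
    total = len(all_citations)
    return {combo: count / total for combo, count in counts.items()}


# Toy data (hypothetical identifiers, not real citations).
toy = {
    "Google Scholar": {"c1", "c2", "c3", "c4", "c5", "c6", "c7", "c8"},
    "Scopus": {"c1", "c2", "c3", "c9"},
    "WoS": {"c1", "c2", "c10"},
}

shares = overlap_shares(toy)
# Citations that appear only in Google Scholar are the ones missed by WoS and Scopus.
missed_by_wos_and_scopus = shares.get(("Google Scholar",), 0.0)
```

In this toy case half of the distinct citations appear only in Google Scholar, which is the kind of gap the figure reports for the social sciences and humanities.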

Nevertheless, it should be noted that metrics have become an annoying noise for the development of the various fields of knowledge in the social sciences and humanities. Metrics are viewed with suspicion, and their credibility as a means of strengthening scholarly communities is limited to researchers in the field itself. Great efforts are made to publish in WoS and Scopus journals for poor rewards, while the real impact on colleagues' work is ignored; many state-of-the-art reviews include publications regardless of whether they are highly cited; the expected results in terms of project funding are poor; and there is widespread confusion about how to position oneself in the field and about what counts as a truly innovative approach.

In terms of publications, Latin America is excluded from international indices and, therefore, from their institutions' focus. The region has developed its communication potential through university journals that, in most cases, owe their visibility to school and department networks rather than to the support of learned societies that would enable them to become forums for national and international discussion. Governmental support for these efforts is reduced to assessing them with criteria proposed by international publishing houses or private consortia such as Elsevier, Clarivate or Google (in the best cases, which are few). If we consider that a large share of the journals in such databases, upwards of 80%, come from large publishing firms (Vélez, Lucio, Leydesdorff, 2016), the debate is framed as a dichotomy between publishing mainly in European and North American journals and strengthening the national publishing industry. On one side lies international standardisation; on the other, self-determination. For the social sciences and humanities this is a significant issue, given the importance of regional developments, the development of an internal community for Latin America, and the differential growth patterns of their production (Engels et al, 2012; Bornmann et al, 2010), but also given the impact attained in journals outside Scopus and WoS, as shown by Martín-Martín et al (2018). In other words, whereas medicine needs global communication spaces because its results can potentially be generalised, the social sciences and humanities have to discuss regional matters such as public policies, regional history, or the organization of heritage according to their own criteria, without neglecting international dialogue.

In this scenario, metrics for the social sciences and humanities urgently need a creative reformulation. On the one hand, they have to respond to the needs of researchers in their field. On the other, they have to make visible those publications that are currently invisible. The challenge must go beyond reformulating equations over the same data and the same databases. Perhaps this is about new indices. More important, however, are the questions that lead to the measures, and the consistency of those measures with the results expected by those who are measured. Above all, it is about returning to the basics of our communities, to the fundamental questions: Why do researchers write? For whom? How do they want to strengthen their communities? What are the paths for communicating knowledge? Once a baseline is established, creative solutions will perhaps be found to guide the work.

Thinking of the sciences as the result of the institutionalisation of their communication leads to growing specialisation in particular domains, the dissemination of knowledge through multiple sources, conversations with various academic and non-academic stakeholders, and the consolidation of communities. One way or another there is open competition to contribute to problem-solving, but not necessarily competition against one single centre. What emerges are heterarchies, networks with multiple centres and peripheries, rather than production neatly distributed in exact impact quartiles, a measure at odds with the multiple scale-free networks that may be found in different fields of knowledge. Other measures are possible:

- Observing the set of production in as many global and regional databases as possible: Redalyc, Scopus, Scielo, WoS…

- Creating knowledge maps that guide new research and in which institutions and expert researchers can be easily located.

- Widening the base to observe references to and citations of other forms of production, such as books, media and social networks, in order to discover how research is used in different forms of production intended for different audiences.

- Identifying the usage of open access sources in contrast to closed publications or those charging APCs.

- Observing the growth of subject communities and institutional and interinstitutional research programs.

- Discovering the collaboration with non-academic sectors in building knowledge and the participation of different sectors in developing research.
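Two of the measures listed above, observing production across several databases and identifying the use of open access sources, can be sketched in a few lines. This is only an illustration under stated assumptions: the record fields (`doi`, `open_access`), the function names and the DOIs are hypothetical, and real exports from Redalyc, Scopus, SciELO or WoS would need their own parsing and matching rules.

```python
def merge_by_doi(records_by_database):
    """Combine records from several databases, keyed by normalised DOI."""
    merged = {}
    for database, records in records_by_database.items():
        for record in records:
            # Normalise the DOI so the same article matches across databases.
            doi = record["doi"].strip().lower()
            entry = merged.setdefault(doi, {"databases": set(), "open_access": False})
            entry["databases"].add(database)
            # Treat the article as open access if any database marks it so.
            entry["open_access"] = entry["open_access"] or record["open_access"]
    return merged


def open_access_share(merged):
    """Fraction of distinct articles flagged as open access."""
    if not merged:
        return 0.0
    return sum(entry["open_access"] for entry in merged.values()) / len(merged)


# Toy records with invented DOIs (assumption: each record has these two fields).
toy_records = {
    "Redalyc": [
        {"doi": "10.0000/example.1", "open_access": True},
        {"doi": "10.0000/example.2", "open_access": True},
    ],
    "Scopus": [
        {"doi": "10.0000/EXAMPLE.1", "open_access": True},
        {"doi": "10.0000/example.3", "open_access": False},
    ],
}

merged = merge_by_doi(toy_records)
share = open_access_share(merged)
# Articles that only a regional database makes visible.
regional_only = sorted(doi for doi, e in merged.items() if e["databases"] == {"Redalyc"})
```

Keeping the set of databases per article makes it possible to see which production is visible only in regional sources, which is the point of widening the observation base.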

All in all, what is needed are metrics that answer questions about researchers' work, rather than telling us how near or far one is from the forms of production of particle physics or immunovirology, or from the impact of Nature or Science. In this context it is worth returning to the questions. At its birth, and above all in order to begin a real dialogue with the community, it is fitting for AmeliCA to ask researchers: what would they like to see when their own production is measured? What kinds of goals and objectives do they have that could be monitored through metrics? This is the open-ended question.

Gómez-Morales, Y. J. (2015). Usos y abusos de la bibliometría. Revista Colombiana de Antropología, 51(1), 291-307.

Harzing, A. W. (2013). Document categories in the ISI Web of Knowledge: Misunderstanding the Social Sciences? Scientometrics, 94, 23–34. DOI: 10.1007/s11192-012-0738-1

Marques, F. (2015). Registros Valiosos. Pesquisa FAPESP, 233, 34-37.

Martín-Martín, A., Orduna-Malea, E., Thelwall, M., & Delgado-López-Cózar, E. (2018). Google Scholar, Web of Science, and Scopus: a systematic comparison of citations in 252 subject categories. arXiv:1808.05053v1. DOI: 10.31235/osf.io/42nkm

Miguel, S. (2011). Revistas y producción científica de América Latina y el Caribe: su visibilidad en SciELO, RedALyC y SCOPUS. Revista Interamericana de Bibliotecología, 34(2), 187-199.

Romero-Torres, M., Acosta-Moreno, L.A., & Tejada-Gómez, M.A. (2013). Ranking de revistas científicas en Latinoamérica mediante el índice h: estudio de caso Colombia. Revista Española de Documentación Científica, 36(1). doi: http://dx.doi.org/10.3989/redc.2013.1.876

San Francisco Declaration on Research Assessment (DORA) (2013). Retrieved from http://www.ascb.org/SFdeclaration.html

Sanz-Casado, E., García-Zorita, C., Serrano-López, A. E., Efraín-García, P., & De Filipo, D. (2013). Rankings nacionales elaborados a partir de múltiples indicadores frente a los de índices sintéticos. Revista Española de Documentación Científica, 36(3). doi: http://dx.doi.org/10.3989/redc.2013.3.1.023

Torres-Salinas, D. & Delgado-López-Cózar, E. (2013). Cobertura de las editoriales científicas del Book citation index en ciencias sociales y humanidades: ¿la historia se repite? Anuario ThinkEPI, 7, 110-113.

Vélez-Cuartas, G., Lucio-Arias, D., & Leydesdorff, L. (2016). Regional and global science: Publications from Latin America and the Caribbean in the SciELO Citation Index and the Web of Science. El profesional de la información, 25(1), 35-46.

Wouters, P. & Hicks, D. (2015). The Leiden Manifesto for research metrics. Nature, 520, April, 429-431.

Žic Fuchs, M. (2014). Bibliometrics use and abuse in the humanities. Portland Press Ltd. Retrieved from http://www.portlandpress.com/pp/books/online/wg87/087/0107/0870107.pdf